Introduction

Standard large language models respond to user queries by generating plain text. This works well for many applications, such as chatbots, but plain text is hard to work with if you want to access details in the response programmatically. Some models can respond with structured JSON instead, making it easy to use data from the LLM’s output directly in your application code. If you’re using a supported model, you can enable structured responses by passing your desired schema to the response_format key of the Chat Completions API.

Supported models

The following newly released top models support JSON mode:

- openai/gpt-oss-120b
- openai/gpt-oss-20b
- moonshotai/Kimi-K2-Instruct
- zai-org/GLM-5
- zai-org/GLM-4.5-Air-FP8
- MiniMaxAI/MiniMax-M2.5
- Qwen/Qwen3-Next-80B-A3B-Instruct
- Qwen/Qwen3.5-397B-A17B
- Qwen/Qwen3-235B-A22B-Thinking-2507
- Qwen/Qwen3-Coder-480B-A35B-Instruct-FP8
- Qwen/Qwen3-235B-A22B-Instruct-2507-tput
- deepseek-ai/DeepSeek-R1
- deepseek-ai/DeepSeek-R1-0528-tput
- deepseek-ai/DeepSeek-V3
- meta-llama/Llama-4-Maverick-17B-128E-Instruct-FP8
- Qwen/Qwen2.5-VL-72B-Instruct

The following models also support JSON mode:

- meta-llama/Llama-3.3-70B-Instruct-Turbo
- deepcogito/cogito-v2-preview-llama-70B
- deepcogito/cogito-v2-preview-llama-109B-MoE
- deepcogito/cogito-v2-preview-llama-405B
- deepcogito/cogito-v2-preview-deepseek-671b
- deepseek-ai/DeepSeek-R1-Distill-Llama-70B
- deepseek-ai/DeepSeek-R1-Distill-Qwen-14B
- meta-llama/Meta-Llama-3.1-8B-Instruct-Turbo
- meta-llama/Llama-3.3-70B-Instruct-Turbo-Free
- Qwen/Qwen2.5-7B-Instruct-Turbo
- Qwen/Qwen2.5-Coder-32B-Instruct
- Qwen/QwQ-32B
- arcee-ai/coder-large
- meta-llama/Llama-3.2-3B-Instruct-Turbo
- meta-llama/Meta-Llama-3-8B-Instruct-Lite
- meta-llama/Llama-3-70b-chat-hf
- google/gemma-3n-E4B-it
- mistralai/Mistral-7B-Instruct-v0.1
- mistralai/Mistral-7B-Instruct-v0.2
- mistralai/Mistral-7B-Instruct-v0.3
- arcee_ai/arcee-spotlight

Basic example

Let’s look at a simple example, where we pass a transcript of a voice note to a model and ask it to summarize it. We want the summary to follow a fixed structure, which we describe with a JSON schema and pass to the model through the response_format key.

Let’s see what this looks like:
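As a minimal sketch of such a request, the block below builds a Chat Completions payload for the voice-note summary and checks a reply against the schema. It assumes that response_format accepts a value of the form `{"type": "json_schema", "schema": ...}`. The field names (`title`, `summary`, `actionItems`), the transcript, and the helpers `build_request` and `parse_summary` are hypothetical. The payload is only constructed here, not sent.

```python
import json

# Hypothetical schema for the voice-note summary; the field names are illustrative.
VOICE_NOTE_SCHEMA = {
    "type": "object",
    "properties": {
        "title": {"type": "string", "description": "A title for the voice note"},
        "summary": {"type": "string", "description": "A one-sentence summary"},
        "actionItems": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["title", "summary", "actionItems"],
}


def build_request(transcript: str, model: str) -> dict:
    """Build a Chat Completions payload that asks for schema-shaped JSON."""
    system_prompt = (
        "Summarize the voice note. Only answer in JSON that follows this schema: "
        + json.dumps(VOICE_NOTE_SCHEMA)
    )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": transcript},
        ],
        # Assumed shape of the response_format value.
        "response_format": {"type": "json_schema", "schema": VOICE_NOTE_SCHEMA},
    }


def parse_summary(content: str) -> dict:
    """Parse the model's reply and check that every required key is present."""
    data = json.loads(content)
    missing = [key for key in VOICE_NOTE_SCHEMA["required"] if key not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return data


request = build_request("Remember to email Sam about the budget.", "openai/gpt-oss-20b")
print(json.dumps(request["response_format"], indent=2))
```

The resulting dictionary can be posted to the Chat Completions endpoint or unpacked into an SDK call, and `parse_summary` can then be applied to the content of the returned message.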

Prompting the model

It’s important to always tell the model to respond only in JSON and to include a plain-text copy of the schema in the prompt (either in the system prompt or in a user message). This instruction must be given in addition to passing the schema via the response_format parameter.

Given an explicit “respond in JSON” direction and the schema text, the model generates output that matches the structure you defined. Combining the textual schema with the response_format setting ensures consistent, valid JSON responses every time.
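One way to keep the prompt and the response_format value in sync is to derive both from the same schema object. The sketch below does that with a hypothetical helper, `with_schema_prompt`. The `json_schema` shape of response_format and the wording of the instruction are assumptions.

```python
import json


def with_schema_prompt(payload: dict, schema: dict) -> dict:
    """Return a copy of payload whose system message and response_format
    both carry the same schema. Any existing system message is replaced."""
    instruction = (
        "Only respond in JSON. The response must follow this schema:\n"
        + json.dumps(schema, indent=2)
    )
    other_messages = [m for m in payload.get("messages", []) if m["role"] != "system"]
    return {
        **payload,
        "messages": [{"role": "system", "content": instruction}, *other_messages],
        # Assumed shape of the response_format value.
        "response_format": {"type": "json_schema", "schema": schema},
    }


schema = {
    "type": "object",
    "properties": {"answer": {"type": "string"}},
    "required": ["answer"],
}
payload = with_schema_prompt(
    {
        "model": "openai/gpt-oss-20b",
        "messages": [{"role": "user", "content": "What is the capital of France?"}],
    },
    schema,
)
print(payload["messages"][0]["content"])
```

Because the schema text in the prompt is generated from the same dictionary that is sent in response_format, the two cannot drift apart when the schema changes.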

Regex example
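Regex mode constrains the output to a regular expression instead of a JSON schema. The following is a minimal sketch of a sentiment classification request, assuming that response_format accepts `{"type": "regex", "pattern": ...}`. The label set, the prompt, and the helper names are hypothetical, and the payload is only built, not sent.

```python
import re

# Hypothetical label set for a sentiment classifier.
SENTIMENT_PATTERN = r"(positive|neutral|negative)"


def build_regex_request(text: str, model: str) -> dict:
    """Build a payload that restricts the reply to one of the three labels."""
    return {
        "model": model,
        "messages": [
            {
                "role": "system",
                "content": "Classify the sentiment of the text as positive, neutral, or negative.",
            },
            {"role": "user", "content": text},
        ],
        # Assumed shape of the response_format value for regex mode.
        "response_format": {"type": "regex", "pattern": SENTIMENT_PATTERN},
    }


def is_valid_label(output: str) -> bool:
    """Check locally that a reply matches the whole pattern."""
    return re.fullmatch(SENTIMENT_PATTERN, output) is not None


request = build_regex_request("The flight was delayed again.", "openai/gpt-oss-20b")
print(request["response_format"])
```

The local `is_valid_label` check is optional, but it gives application code a cheap guard in case the constraint is ever dropped or misconfigured.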

All the models supported for JSON mode also support regex mode, which can be used, for example, to constrain a classification output to a fixed set of labels.

Reasoning model example

You can also extract structured outputs from some reasoning models such as DeepSeek-R1-0528.

Below we ask the model to solve a math problem step-by-step showing its work:
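A minimal sketch of what such a request and its parsing could look like follows. The schema fields (`steps`, `explanation`, `output`, `final_answer`), the equation, and the helper `parse_solution` are hypothetical, and the `json_schema` shape of response_format is an assumption. The sample reply is written by hand to illustrate the shape and is not real model output.

```python
import json

# Hypothetical schema: a list of worked steps plus a final answer.
MATH_SCHEMA = {
    "type": "object",
    "properties": {
        "steps": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "explanation": {"type": "string"},
                    "output": {"type": "string"},
                },
                "required": ["explanation", "output"],
            },
        },
        "final_answer": {"type": "string"},
    },
    "required": ["steps", "final_answer"],
}

request = {
    "model": "deepseek-ai/DeepSeek-R1-0528-tput",
    "messages": [
        {
            "role": "system",
            "content": "You are a math tutor. Only answer in JSON following this schema: "
            + json.dumps(MATH_SCHEMA),
        },
        {"role": "user", "content": "Solve 8x + 7 = -23 step by step."},
    ],
    # Assumed shape of the response_format value.
    "response_format": {"type": "json_schema", "schema": MATH_SCHEMA},
}


def parse_solution(content: str) -> dict:
    """Parse a reply and check the parts the application relies on."""
    data = json.loads(content)
    if not isinstance(data.get("steps"), list) or "final_answer" not in data:
        raise ValueError("reply does not match the expected structure")
    return data


# Hand-written sample reply, for illustration only.
sample = json.dumps(
    {
        "steps": [
            {"explanation": "Subtract 7 from both sides", "output": "8x = -30"},
            {"explanation": "Divide both sides by 8", "output": "x = -3.75"},
        ],
        "final_answer": "x = -3.75",
    }
)
solution = parse_solution(sample)
print(solution["final_answer"])
```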

Vision model example

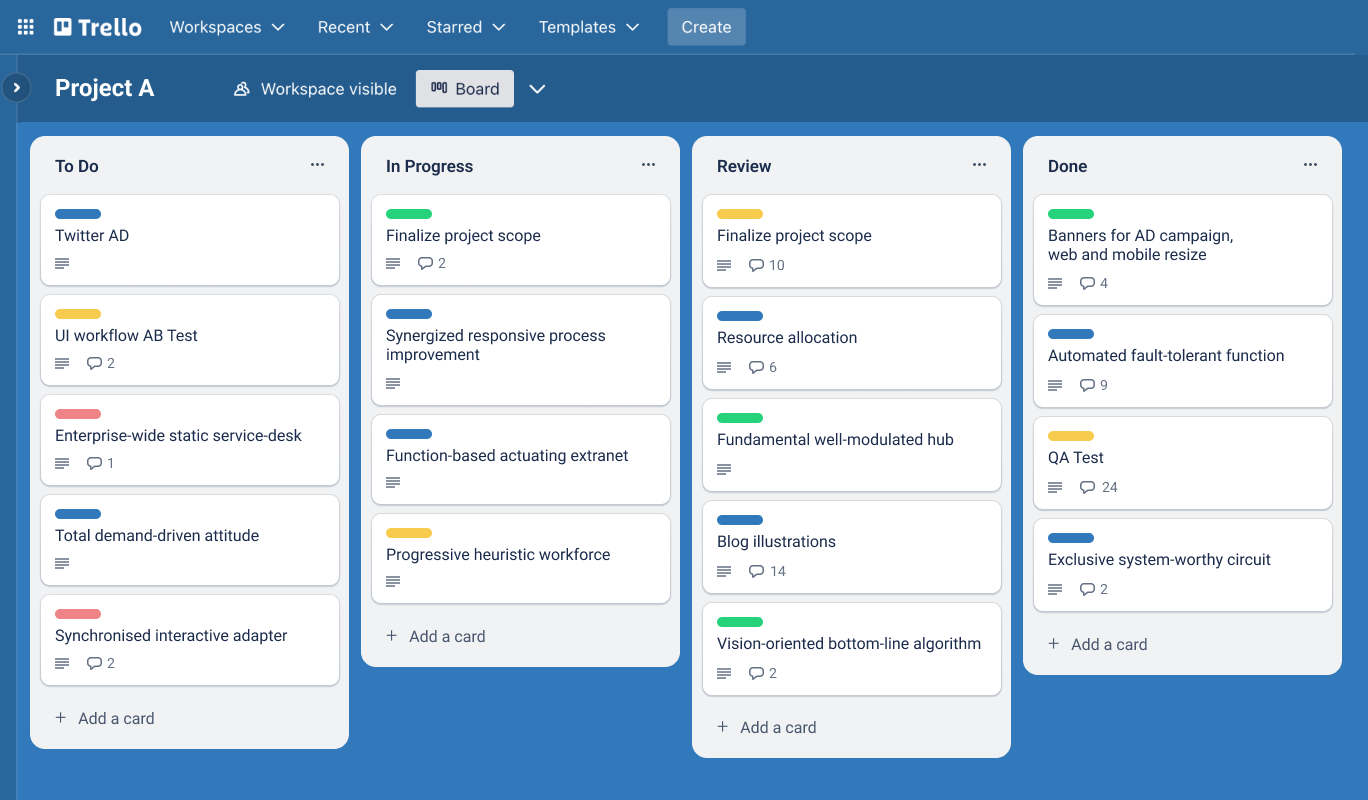

Let’s look at another example, this time using a vision model. We want our LLM to extract text from a screenshot of a Trello board. In particular, we want to know the name of the project (Project A) and the number of columns in the board (4).

Let’s try it out:
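The sketch below shows one possible shape for this request. It assumes the OpenAI-style multimodal message format (a content list with `text` and `image_url` parts) and the `json_schema` shape of response_format. The image URL, the field names (`projectName`, `columnCount`), and the helper names are hypothetical. The sample reply is written by hand from the values stated above.

```python
import json

# Hypothetical schema for the two facts we want from the screenshot.
BOARD_SCHEMA = {
    "type": "object",
    "properties": {
        "projectName": {"type": "string"},
        "columnCount": {"type": "integer"},
    },
    "required": ["projectName", "columnCount"],
}


def build_vision_request(image_url: str, model: str) -> dict:
    """Build a payload that sends an image and asks for schema-shaped JSON."""
    return {
        "model": model,
        "messages": [
            {
                "role": "system",
                "content": "Only answer in JSON following this schema: "
                + json.dumps(BOARD_SCHEMA),
            },
            {
                "role": "user",
                "content": [
                    {
                        "type": "text",
                        "text": "Extract the project name and the number of columns from this board.",
                    },
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            },
        ],
        # Assumed shape of the response_format value.
        "response_format": {"type": "json_schema", "schema": BOARD_SCHEMA},
    }


def parse_board(content: str) -> dict:
    """Parse a reply and check the types of both fields."""
    data = json.loads(content)
    name, count = data.get("projectName"), data.get("columnCount")
    # bool is a subclass of int in Python, so it is rejected explicitly.
    if not isinstance(name, str) or not isinstance(count, int) or isinstance(count, bool):
        raise ValueError("reply does not match the expected structure")
    return data


request = build_vision_request(
    "https://example.com/trello-board.png",  # placeholder URL
    "Qwen/Qwen2.5-VL-72B-Instruct",
)
# Hand-written sample reply, for illustration only.
board = parse_board('{"projectName": "Project A", "columnCount": 4}')
print(board)
```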

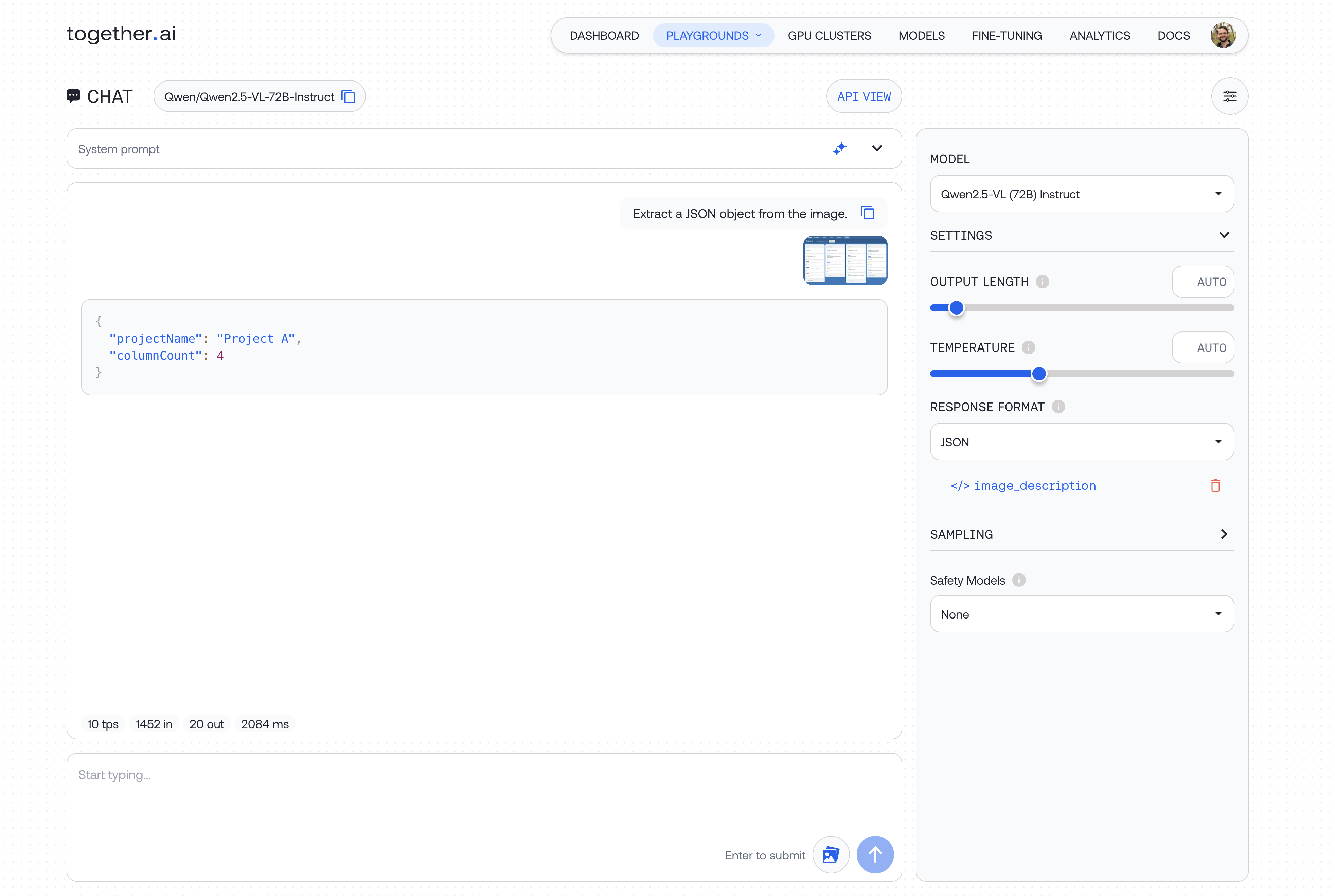

Try out your code in the Together Playground

You can try out JSON Mode in the Together Playground to test variations on your schema and prompt. Just click the RESPONSE FORMAT dropdown in the right-hand sidebar, choose JSON, and upload your schema!
