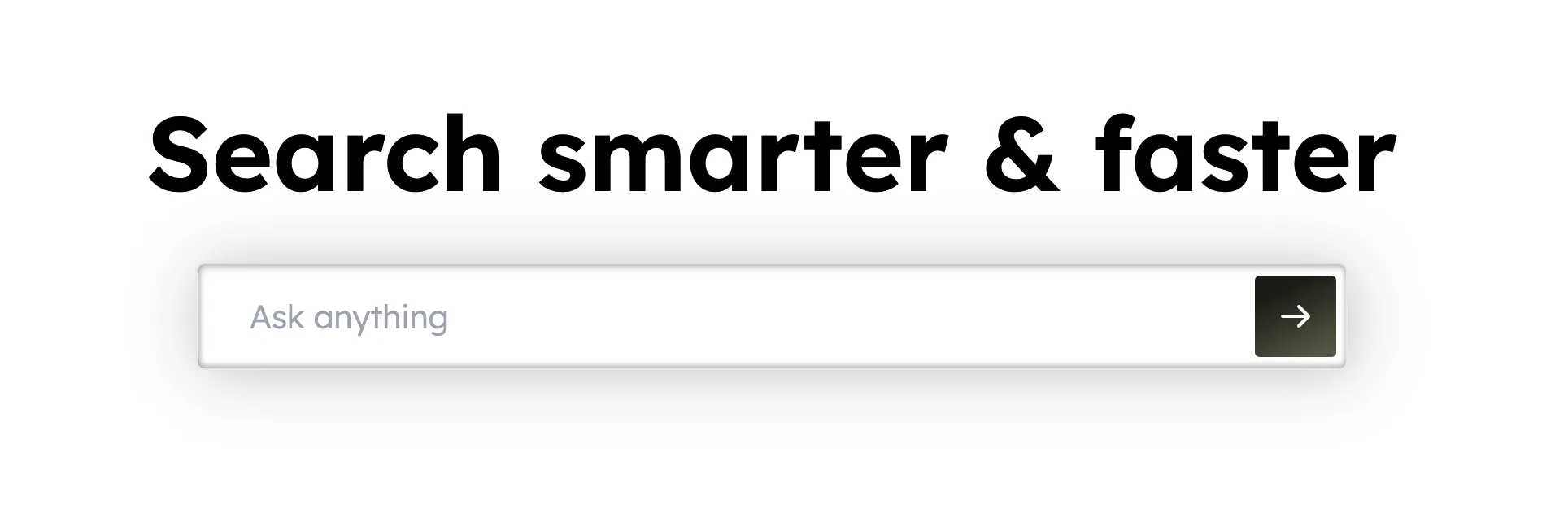

How to build an AI search engine (OSS Perplexity Clone)

How to build a full-stack AI search engine inspired by Perplexity with Next.js and Together AI

TurboSeek is an app that answers questions using Together AI’s open-source LLMs. It pulls multiple sources from the web using Bing’s API, then summarizes them to present a single answer to the user.

In this post, you’ll learn how to build the core parts of TurboSeek. The app is open-source and built with Next.js and Tailwind, but Together’s API can be used with any language or framework.

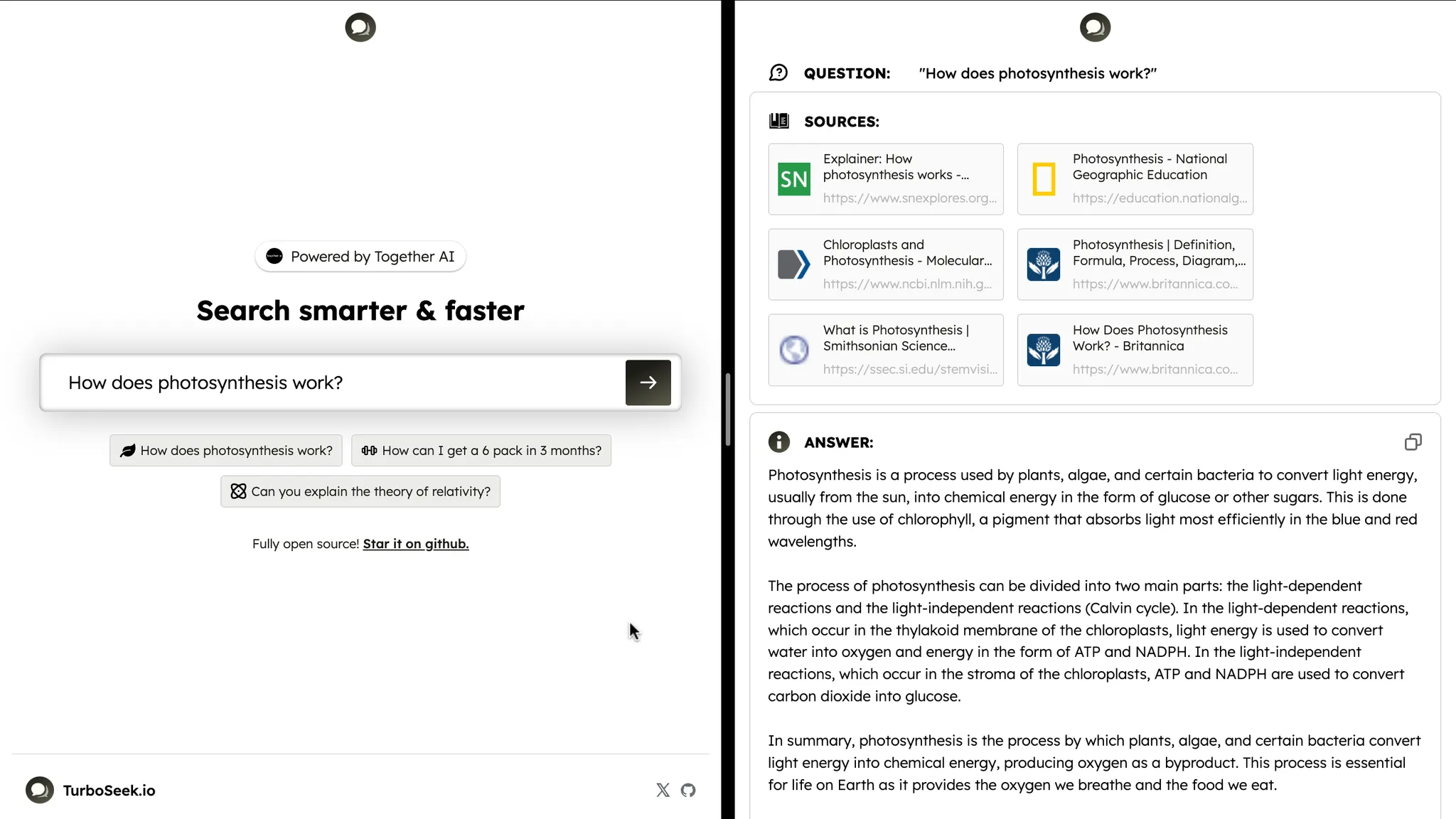

Building the input prompt

TurboSeek’s core interaction is a text field where the user can enter a question.

In our page, we’ll render an `<input>` and control it using some new React state:

```tsx
// app/page.tsx
"use client";

import { useState } from "react";

export default function Page() {
  let [question, setQuestion] = useState("");

  return (
    <form>
      <input
        value={question}
        onChange={(e) => setQuestion(e.target.value)}
        placeholder="Ask anything"
      />
    </form>
  );
}
```

When the user submits our form, we need to do two things:

- Use the Bing API to fetch sources from the web, and

- Pass the text from the sources to an LLM to summarize and generate an answer
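The two steps above can be sketched as one async function. This is a minimal sketch, not TurboSeek’s code: `askQuestion`, `GetSources`, and `GetAnswer` are hypothetical names, and the two steps are passed in as functions so the flow can run without a server. In the app, they would be the fetch() calls to our two API routes.

```typescript
// Shape of one web source, matching the { name, url } objects used in this tutorial
interface Source {
  name: string;
  url: string;
}

// Hypothetical signatures for the two steps (in the app: /api/getSources and /api/getAnswer)
type GetSources = (question: string) => Promise<Source[]>;
type GetAnswer = (question: string, sources: Source[]) => Promise<string>;

// Runs the steps in order: the answer step needs the sources,
// so it can't start until the first step has finished
async function askQuestion(
  question: string,
  getSources: GetSources,
  getAnswer: GetAnswer,
): Promise<{ sources: Source[]; answer: string }> {
  const sources = await getSources(question);
  const answer = await getAnswer(question, sources);
  return { sources, answer };
}

// Usage with stubbed steps (no network access needed)
const result = askQuestion(
  "What is Next.js?",
  async () => [{ name: "Next.js", url: "https://nextjs.org" }],
  async (q, sources) => `Answer to "${q}" from ${sources.length} source(s)`,
);
result.then((r) => console.log(r.answer));
```

Keeping the steps sequential is what lets the UI show the sources first and the answer afterwards.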

Let’s start by fetching the sources. We’ll wire up a submit handler to our form that makes a POST request to a new endpoint, /api/getSources:

```tsx
// app/page.tsx
"use client";

import { useState, type FormEvent } from "react";

export default function Page() {
  let [question, setQuestion] = useState("");

  async function handleSubmit(e: FormEvent<HTMLFormElement>) {
    e.preventDefault();

    // This fetch() will 404 for now, since the endpoint doesn't exist yet
    let response = await fetch("/api/getSources", {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ question }),
    });
    let sources = await response.json();
  }

  return (
    <form onSubmit={handleSubmit}>
      <input
        value={question}
        onChange={(e) => setQuestion(e.target.value)}
        placeholder="Ask anything"
      />
    </form>
  );
}
```
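Both requests in this tutorial follow the same pattern: POST a JSON body, then parse the JSON reply. A small helper can also set the `Content-Type` header and turn an HTTP error such as the 404 above into a clear exception instead of a confusing failure inside `response.json()`. This is a sketch: `postJSON` and `FetchLike` are hypothetical names, and the fetch function is passed in so the example runs without a server.

```typescript
// Minimal subset of the fetch() API that this helper relies on (an assumption for this sketch)
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// POSTs `body` as JSON and returns the parsed JSON reply, throwing on non-2xx statuses
async function postJSON<T>(fetchFn: FetchLike, url: string, body: unknown): Promise<T> {
  const response = await fetchFn(url, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(body),
  });
  if (!response.ok) {
    throw new Error(`POST ${url} failed with status ${response.status}`);
  }
  return (await response.json()) as T;
}

// Usage with a fake fetch that echoes the request body back
const fakeFetch: FetchLike = async (_url, init) => ({
  ok: true,
  status: 200,
  json: async () => JSON.parse(init.body),
});

postJSON<{ question: string }>(fakeFetch, "/api/getSources", { question: "hi" }).then((data) =>
  console.log(data.question),
);
```

In the browser you would pass the real `fetch`, e.g. `postJSON(fetch, "/api/getSources", { question })`.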

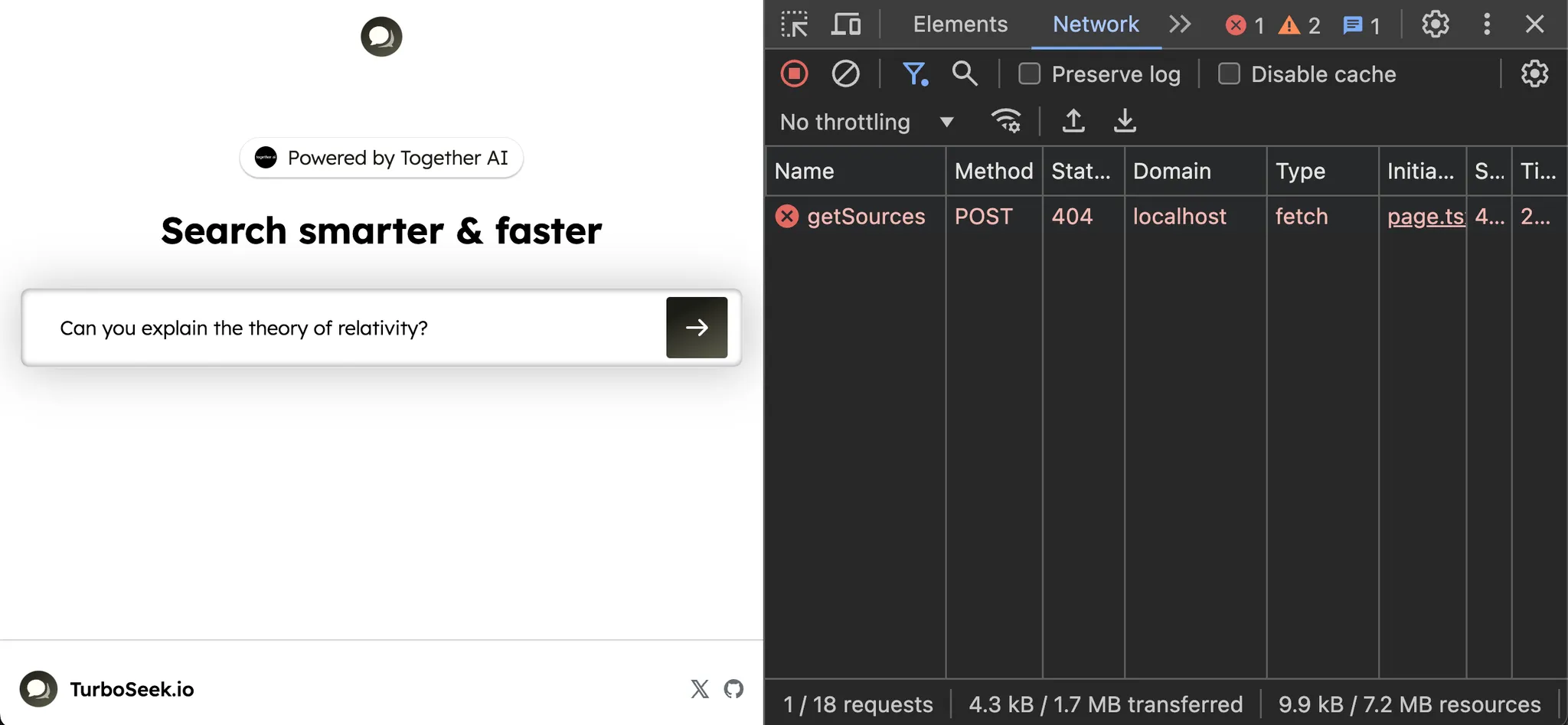

If we submit the form, we’ll see our React app make a request to /api/getSources.

Our frontend is ready! Let’s add an API route to get the sources.

Getting web sources with Bing

To create our API route, we’ll make a new app/api/getSources/route.js file:

```js
// app/api/getSources/route.js
export async function POST(req) {
  let json = await req.json();

  // `json.question` has the user's question
}
```

We’re ready to send our question to Bing and get back six sources from the web.

The Bing API lets you make a fetch request to get back search results, so we’ll use it to build up our list of sources:

```js
// app/api/getSources/route.js
import { NextResponse } from "next/server";

export async function POST(req) {
  const json = await req.json();

  // Ask for six results from the en-US market, with strict safe search
  const params = new URLSearchParams({
    q: json.question,
    mkt: "en-US",
    count: "6",
    safeSearch: "Strict",
  });

  const response = await fetch(
    `https://api.bing.microsoft.com/v7.0/search?${params}`,
    {
      method: "GET",
      headers: {
        "Ocp-Apim-Subscription-Key": process.env["BING_API_KEY"],
      },
    },
  );

  const { webPages } = await response.json();

  // Return only the name and URL of each web result
  return NextResponse.json(
    webPages.value.map((result) => ({
      name: result.name,
      url: result.url,
    })),
  );
}
```
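The route keeps only `name` and `url` from each result. If Bing finds no web pages, the response may not include `webPages` at all, and `webPages.value.map` would then throw. A defensive version of that mapping step could look like this sketch; `toSources` is a hypothetical helper, and the `BingSearchResponse` interface is a hand-written assumption covering only the fields this tutorial reads, not Microsoft’s full response schema.

```typescript
// Hand-written subset of a Bing Web Search response: only the fields read in this tutorial
interface BingSearchResponse {
  webPages?: {
    value: { name: string; url: string }[];
  };
}

interface Source {
  name: string;
  url: string;
}

// Maps a Bing response to the { name, url } list the frontend expects,
// returning an empty list when no web pages came back
function toSources(data: BingSearchResponse): Source[] {
  return (data.webPages?.value ?? []).map((result) => ({
    name: result.name,
    url: result.url,
  }));
}

// Usage: extra fields on each result are dropped
const sample = {
  webPages: {
    value: [{ name: "Example", url: "https://example.com", snippet: "..." }],
  },
};
console.log(toSources(sample));
console.log(toSources({}));
```

Inside the route, `NextResponse.json(toSources(await response.json()))` would replace the inline mapping.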

To call Bing’s API, you’ll need an API key from Microsoft. Once you have it, set it in .env.local:

```
# .env.local
BING_API_KEY=xxxxxxxxxxxx
```

and our API handler should work.
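If the key is missing, `process.env["BING_API_KEY"]` is simply `undefined`, the request goes out without a valid key, and Bing rejects it with an error that doesn’t point at the real cause. A small helper can fail fast instead. This is a sketch: `requireEnv` is a hypothetical name, and it takes the environment object as a parameter so it can be called as `requireEnv(process.env, "BING_API_KEY")` in the route and tested without touching the real environment.

```typescript
// Returns the named variable from `env`, or throws if it is missing or empty
function requireEnv(env: Record<string, string | undefined>, name: string): string {
  const value = env[name];
  if (!value) {
    throw new Error(`Missing environment variable ${name}; add it to .env.local`);
  }
  return value;
}

// Usage with a plain object standing in for process.env
const fakeEnv = { BING_API_KEY: "xxxxxxxxxxxx" };
console.log(requireEnv(fakeEnv, "BING_API_KEY")); // prints xxxxxxxxxxxx
```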

Let’s try it out from our React app! We’ll log the sources in our event handler:

```tsx
// app/page.tsx
"use client";

import { useState, type FormEvent } from "react";

export default function Page() {
  let [question, setQuestion] = useState("");

  async function handleSubmit(e: FormEvent<HTMLFormElement>) {
    e.preventDefault();

    let response = await fetch("/api/getSources", {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ question }),
    });
    let sources = await response.json();

    // Log the response from our new endpoint
    console.log(sources);
  }

  return (
    <form onSubmit={handleSubmit}>
      <input
        value={question}
        onChange={(e) => setQuestion(e.target.value)}
        placeholder="Ask anything"
      />
    </form>
  );
}
```

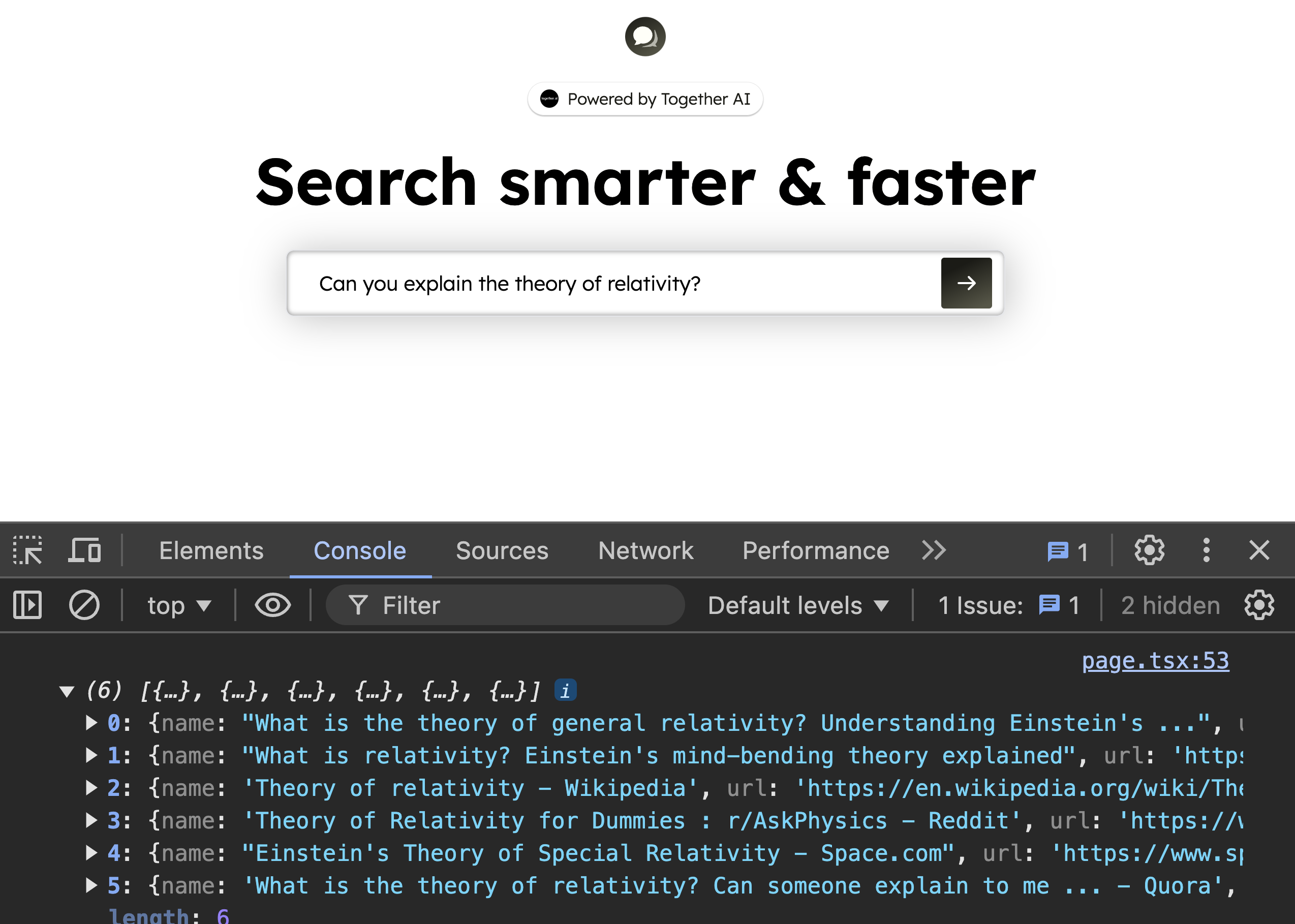

If we submit a question, we’ll see an array of sources logged in the browser console!

Let’s create some new React state to store the responses and display them in our UI:

```tsx
// app/page.tsx
"use client";

import { useState, type FormEvent } from "react";

export default function Page() {
  let [question, setQuestion] = useState("");
  // Each source is a { name, url } object returned by /api/getSources
  let [sources, setSources] = useState<{ name: string; url: string }[]>([]);

  async function handleSubmit(e: FormEvent<HTMLFormElement>) {
    e.preventDefault();

    let response = await fetch("/api/getSources", {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ question }),
    });
    let sources = await response.json();

    // Update the sources with our API response
    setSources(sources);
  }

  return (
    <>
      <form onSubmit={handleSubmit}>
        <input
          value={question}
          onChange={(e) => setQuestion(e.target.value)}
          placeholder="Ask anything"
        />
      </form>

      {/* Display the sources */}
      {sources.length > 0 && (
        <div>
          <p>Sources</p>
          <ul>
            {sources.map((source) => (
              <li key={source.url}>
                <a href={source.url}>{source.name}</a>
              </li>
            ))}
          </ul>
        </div>
      )}
    </>
  );
}
```

If we try it out, our app is working great so far! We’re taking the user’s question, fetching six relevant web sources from Bing, and displaying them in our UI.

Next, let’s work on summarizing the sources.

Fetching the content from each source

Now that our React app has the sources, we can send them to a second endpoint where we’ll scrape each page and use Together to summarize the results into our final answer.

Let’s add that second request to a new endpoint we’ll call /api/getAnswer, passing along the question and sources in the request body:

```tsx
// app/page.tsx
export default function Page() {
  // ...

  async function handleSubmit(e: FormEvent<HTMLFormElement>) {
    e.preventDefault();

    const response = await fetch("/api/getSources", {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ question }),
    });
    const sources = await response.json();
    setSources(sources);

    // Send the question and sources to a new endpoint
    // (this second fetch() will 404 for now)
    const answerResponse = await fetch("/api/getAnswer", {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ question, sources }),
    });
  }

  // ...
}
```

If we submit a new question, we’ll see our React app make a second request to /api/getAnswer. Let’s create the second route!

Make a new app/api/getAnswer/route.js file:

```js
// app/api/getAnswer/route.js
export async function POST(req) {
  let json = await req.json();

  // `json.question` and `json.sources` have our data
}
```

Now that we have the data, we need to:

- Get the text from the URL of each source

- Pass all text to Together and ask for a summary

Let’s start with #1.

To scrape a webpage’s text from our API route, we’ll take this general approach:

```js
async function getTextFromURL(url) {
  // 1. Use fetch() to get the HTML content
  // 2. Use the `jsdom` library to parse the HTML into a JavaScript object
  // 3. Use `@mozilla/readability` to clean the document and
  //    return only the main text of the page
}
```

Let’s implement this new function. We’ll start by installing the jsdom and @mozilla/readability libraries:

```bash
npm i jsdom @mozilla/readability
```

Next, let’s implement the steps:

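```js
// app/api/getAnswer/route.js
import { JSDOM, VirtualConsole } from "jsdom";
import { Readability } from "@mozilla/readability";

async function getTextFromURL(url) {
  // 1. Use fetch() to get the HTML content
  const response = await fetch(url);
  const html = await response.text();

  // 2. Use the `jsdom` library to parse the HTML into a JavaScript object.
  // A VirtualConsole with no listeners keeps jsdom's page errors out of our logs.
  const virtualConsole = new VirtualConsole();
  const dom = new JSDOM(html, { virtualConsole });

  // 3. Use `@mozilla/readability` to clean the document and
  // return only the main text of the page (parse() returns null if it finds no article)
  const article = new Readability(dom.window.document).parse();
  return article?.textContent ?? "";
}
```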
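A slow or unresponsive site would hold up getTextFromURL, and with it the whole answer. One option, sketched here as our own assumption rather than something the tutorial’s code does, is to race each scrape against a timer. `withTimeout` is a hypothetical helper; to also cancel the network request itself, you could pass `{ signal: AbortSignal.timeout(ms) }` as the second argument to `fetch()`.

```typescript
// Rejects if `promise` hasn't settled within `ms` milliseconds; otherwise settles like `promise`.
// Note: this only stops waiting; it does not cancel the underlying work.
function withTimeout<T>(promise: Promise<T>, ms: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new Error(`Timed out after ${ms} ms`)), ms);
  });
  // Clear the timer either way so it doesn't keep the process alive
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Usage: a fast promise wins the race, a never-settling one times out
withTimeout(Promise.resolve("page text"), 100).then((text) => console.log(text));
withTimeout(new Promise<string>(() => {}), 50).catch((err: Error) => console.log(err.message));
```

In the route this would wrap each call, e.g. `withTimeout(getTextFromURL(source.url), 5000)`.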

Let’s try it out!

We’ll run our first source through our new getTextFromURL function:

```js
// app/api/getAnswer/route.js
export async function POST(req) {
  let json = await req.json();

  let textContent = await getTextFromURL(json.sources[0].url);
  console.log(textContent);
}
```

If we submit our form again, we’ll see the text from the first page show up in our server terminal!

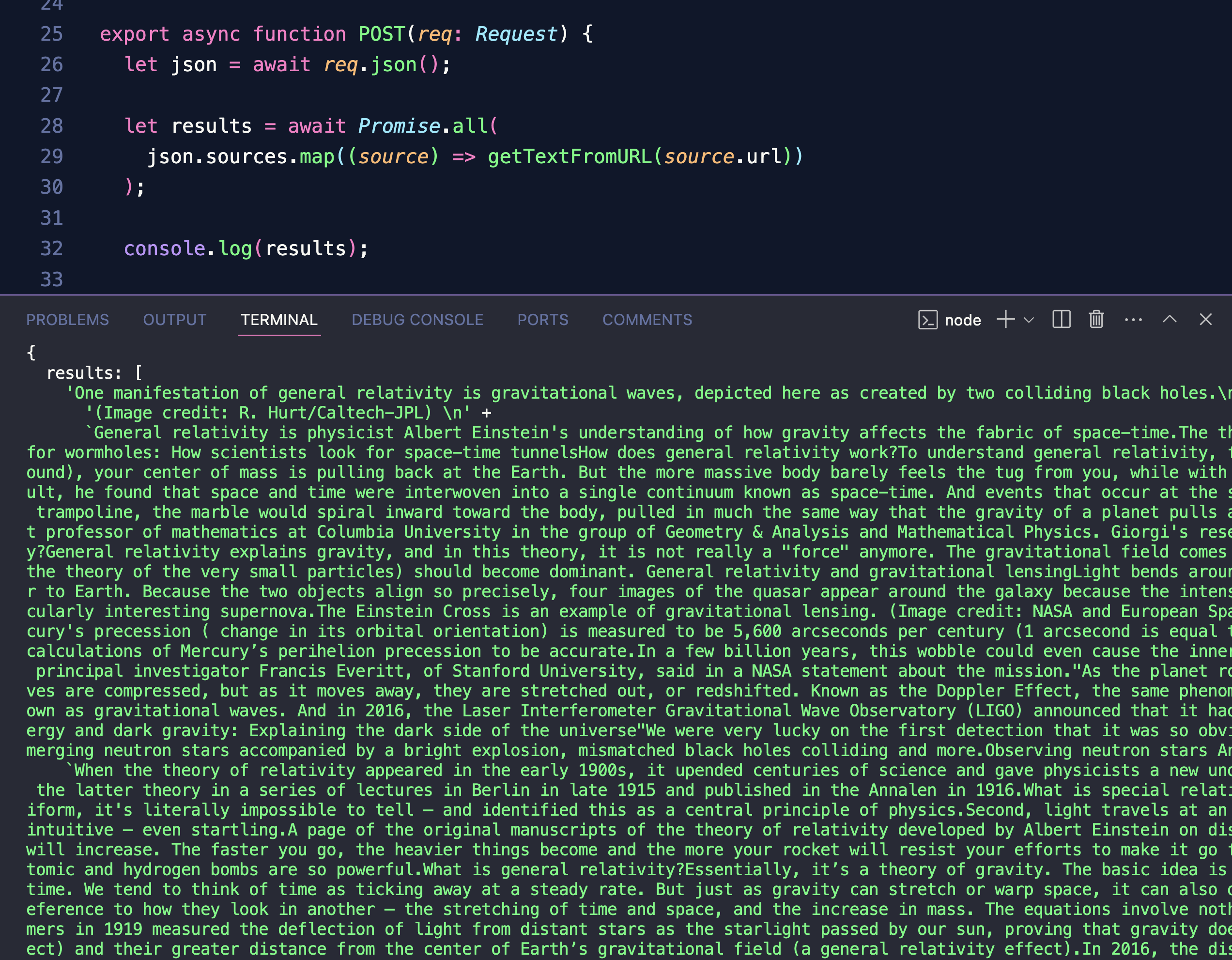

Let’s get the text from all six sources. Since each source is independent, we can use Promise.all to kick off our functions in parallel:

```js
// app/api/getAnswer/route.js
export async function POST(req) {
  let json = await req.json();

  let results = await Promise.all(
    json.sources.map((source) => getTextFromURL(source.url)),
  );
  console.log(results);
}
```
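`Promise.all` rejects as soon as any one of its promises rejects, so a single source that fails (a site that is down, or a page that fails to parse) would fail the whole request. If you’d rather answer from whichever sources succeed, `Promise.allSettled` waits for every promise and reports each outcome. A minimal sketch, with a hypothetical `collectTexts` helper and a stub scraper standing in for getTextFromURL:

```typescript
// Runs `scrape` on every URL in parallel and keeps only the texts that loaded successfully
async function collectTexts(
  urls: string[],
  scrape: (url: string) => Promise<string>,
): Promise<string[]> {
  const settled = await Promise.allSettled(urls.map((url) => scrape(url)));
  const texts: string[] = [];
  settled.forEach((outcome, i) => {
    if (outcome.status === "fulfilled") {
      texts.push(outcome.value);
    } else {
      // Log and skip a failed source instead of failing the whole request
      console.warn(`Skipping ${urls[i]}:`, outcome.reason);
    }
  });
  return texts;
}

// Usage with a stub scraper: the second URL fails, the other two still come back in order
const stubScrape = async (url: string): Promise<string> => {
  if (url.includes("broken")) throw new Error("fetch failed");
  return `text from ${url}`;
};

collectTexts(["https://a.example", "https://broken.example", "https://c.example"], stubScrape).then(
  (texts) => console.log(texts),
);
```

The scrapes still run in parallel; only the failure handling changes.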

If we try again, we’ll now see an array with each web page’s text logged in the server terminal.

We’re ready to pass the source text along to Together to get our final answer!

Summarizing the sources

Now that we have the text content from each source, we can pass it along with a prompt to Together to get a final answer.

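Let’s install Together’s Node SDK:

```bash
npm i together-ai
```

The SDK reads your API key from the TOGETHER_API_KEY environment variable, so add that to .env.local alongside BING_API_KEY. Then use the SDK to query Llama 3.1 8B Turbo: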

```js
// app/api/getAnswer/route.js
import Together from "together-ai";

// The client reads the API key from the TOGETHER_API_KEY environment variable
const together = new Together();

export async function POST(req) {
  const json = await req.json();

  // Fetch the text content from each source (getTextFromURL is defined in this file)
  const results = await Promise.all(
    json.sources.map((source) => getTextFromURL(source.url)),
  );

  // Ask Together to answer the question using the results.
  // join() separates the contexts with blank lines; interpolating the array
  // directly would insert commas between them.
  const systemPrompt = `
  Given a user question and some context, please write a clean, concise
  and accurate answer to the question based on the context. You will be
  given a set of related contexts to the question. Please use the
  context when crafting your answer.

  Here are the set of contexts:

  <contexts>
  ${results.join("\n\n")}
  </contexts>
  `;

  const runner = await together.chat.completions.stream({
    model: "meta-llama/Meta-Llama-3.1-8B-Instruct-Turbo",
    messages: [
      { role: "system", content: systemPrompt },
      { role: "user", content: json.question },
    ],
  });

  // Stream the completion back to the browser
  return new Response(runner.toReadableStream());
}
```
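Each scraped page can be long, and all the contexts have to fit in the model’s context window together with the question. One simple approach, sketched here as an assumption rather than what TurboSeek does, is to cap each source’s text before joining them. `buildSystemPrompt` and `maxCharsPerSource` are hypothetical names, and characters are only a rough proxy for tokens.

```typescript
// Builds the system prompt from the scraped texts: each text is trimmed, cut to
// `maxCharsPerSource` characters, empty ones are dropped, and the rest are joined by blank lines
function buildSystemPrompt(results: string[], maxCharsPerSource: number): string {
  const contexts = results
    .map((text) => text.trim().slice(0, maxCharsPerSource))
    .filter((text) => text.length > 0)
    .join("\n\n");

  return `Given a user question and some context, please write a clean, concise
and accurate answer to the question based on the context. You will be
given a set of related contexts to the question. Please use the
context when crafting your answer.

Here are the set of contexts:

<contexts>
${contexts}
</contexts>`;
}

// Usage: the long second source is cut to 10 characters and the blank third one is dropped
console.log(buildSystemPrompt(["alpha", "b".repeat(50), "   "], 10));
```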

Now we’re ready to read the stream in our React app!

Displaying the answer in the UI

Back in our page, let’s create some new React state called answer to store the text from our LLM:

```tsx
// app/page.tsx
export default function Page() {
  // ...
  const [answer, setAnswer] = useState("");

  async function handleSubmit(e: FormEvent<HTMLFormElement>) {
    e.preventDefault();

    const response = await fetch("/api/getSources", {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ question }),
    });
    const sources = await response.json();
    setSources(sources);

    // Send the question and sources to a new endpoint
    const answerResponse = await fetch("/api/getAnswer", {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ question, sources }),
    });
  }

  // ...
}
```

We can use the ChatCompletionStream helper from Together’s SDK to read the stream and update our answer state with each new chunk:

```tsx
// app/page.tsx
"use client";

import { useState, type FormEvent } from "react";
import { ChatCompletionStream } from "together-ai/lib/ChatCompletionStream";

export default function Page() {
  // ...
  const [answer, setAnswer] = useState("");

  async function handleSubmit(e: FormEvent<HTMLFormElement>) {
    e.preventDefault();
    // Clear the answer from any previous question
    setAnswer("");

    const response = await fetch("/api/getSources", {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ question }),
    });
    const sources = await response.json();
    setSources(sources);

    // Send the question and sources to a new endpoint
    const answerResponse = await fetch("/api/getAnswer", {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ question, sources }),
    });
    if (!answerResponse.body) return;

    // Parse the streamed response and append each new chunk of text to `answer`
    const runner = ChatCompletionStream.fromReadableStream(answerResponse.body);
    runner.on("content", (delta) => setAnswer((prev) => prev + delta));
  }

  // ...
}
```
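Under the hood, answerResponse.body is a ReadableStream of bytes that arrives in pieces, and the SDK helper decodes those pieces and emits `content` events. The general pattern for consuming a streamed body looks like this sketch. It assumes the server streams plain text; the body produced by `runner.toReadableStream()` uses the SDK’s own format, which is why the tutorial lets `ChatCompletionStream.fromReadableStream` parse it. `readTextStream` and `onText` are hypothetical names.

```typescript
// Reads a byte stream chunk by chunk, decoding each chunk as UTF-8 and passing the new text
// to `onText`. Resolves with the full text once the stream ends.
async function readTextStream(
  stream: ReadableStream<Uint8Array>,
  onText: (delta: string) => void,
): Promise<string> {
  const reader = stream.getReader();
  const decoder = new TextDecoder();
  let full = "";
  while (true) {
    const result = await reader.read();
    if (result.done) break;
    // { stream: true } buffers a multi-byte character that is split across two chunks
    const delta = decoder.decode(result.value, { stream: true });
    full += delta;
    onText(delta);
  }
  // Flush any bytes still buffered in the decoder
  const rest = decoder.decode();
  if (rest) {
    full += rest;
    onText(rest);
  }
  return full;
}

// Usage: build a stream from a few encoded chunks, as a server might send them
const encoder = new TextEncoder();
const demo = new ReadableStream<Uint8Array>({
  start(controller) {
    for (const chunk of ["Next.js is ", "a React ", "framework."]) {
      controller.enqueue(encoder.encode(chunk));
    }
    controller.close();
  },
});

let answer = "";
const finished = readTextStream(demo, (delta) => {
  answer += delta; // in the React app, this is where setAnswer((prev) => prev + delta) would go
});
finished.then((full) => console.log(full));
```

The functional update `setAnswer((prev) => prev + delta)` matters here: each chunk is appended to the latest state rather than to a stale copy captured when handleSubmit started.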

Our new React state is ready!

Let’s update our UI to display it:

```tsx
// app/page.tsx
"use client";

import { useState, type FormEvent } from "react";

export default function Page() {
  let [question, setQuestion] = useState("");
  let [sources, setSources] = useState<{ name: string; url: string }[]>([]);
  let [answer, setAnswer] = useState("");

  async function handleSubmit(e: FormEvent<HTMLFormElement>) {
    // ...
  }

  return (
    <>
      <form onSubmit={handleSubmit}>
        <input
          value={question}
          onChange={(e) => setQuestion(e.target.value)}
          placeholder="Ask anything"
        />
      </form>

      {/* Display the sources */}
      {sources.length > 0 && (
        <div>
          <p>Sources</p>
          <ul>
            {sources.map((source) => (
              <li key={source.url}>
                <a href={source.url}>{source.name}</a>
              </li>
            ))}
          </ul>
        </div>
      )}

      {/* Display the answer */}
      {answer && <p>{answer}</p>}
    </>
  );
}
```

If we try submitting a question, we’ll see the sources come in, and once our getAnswer endpoint responds with the first chunk, we’ll see the answer text start streaming into our UI!

The core features of our app are working great.

Digging deeper

We’ve built out the main flow of our app using just two endpoints: one that blocks on an API request to Bing, and one that returns a stream using Together’s Node SDK.

React and Next.js were a great fit for this app, giving us all the tools and flexibility we needed to make a complete full-stack web app with secure server-side logic and reactive client-side updates.

TurboSeek is fully open-source and has even more features like suggesting similar questions, so if you want to keep working on the code from this tutorial, be sure to check it out on GitHub:

https://github.com/Nutlope/turboseek/

And if you’re ready to add streaming LLM features like the chat completions we saw above to your own apps, sign up for Together AI today, get $5 for free to start out, and make your first query in minutes!
